Exploring 3D Elements for XR

Group project at university.

Team

- Supervisor

- 4 developers

- 4 designers (including me)

Goal

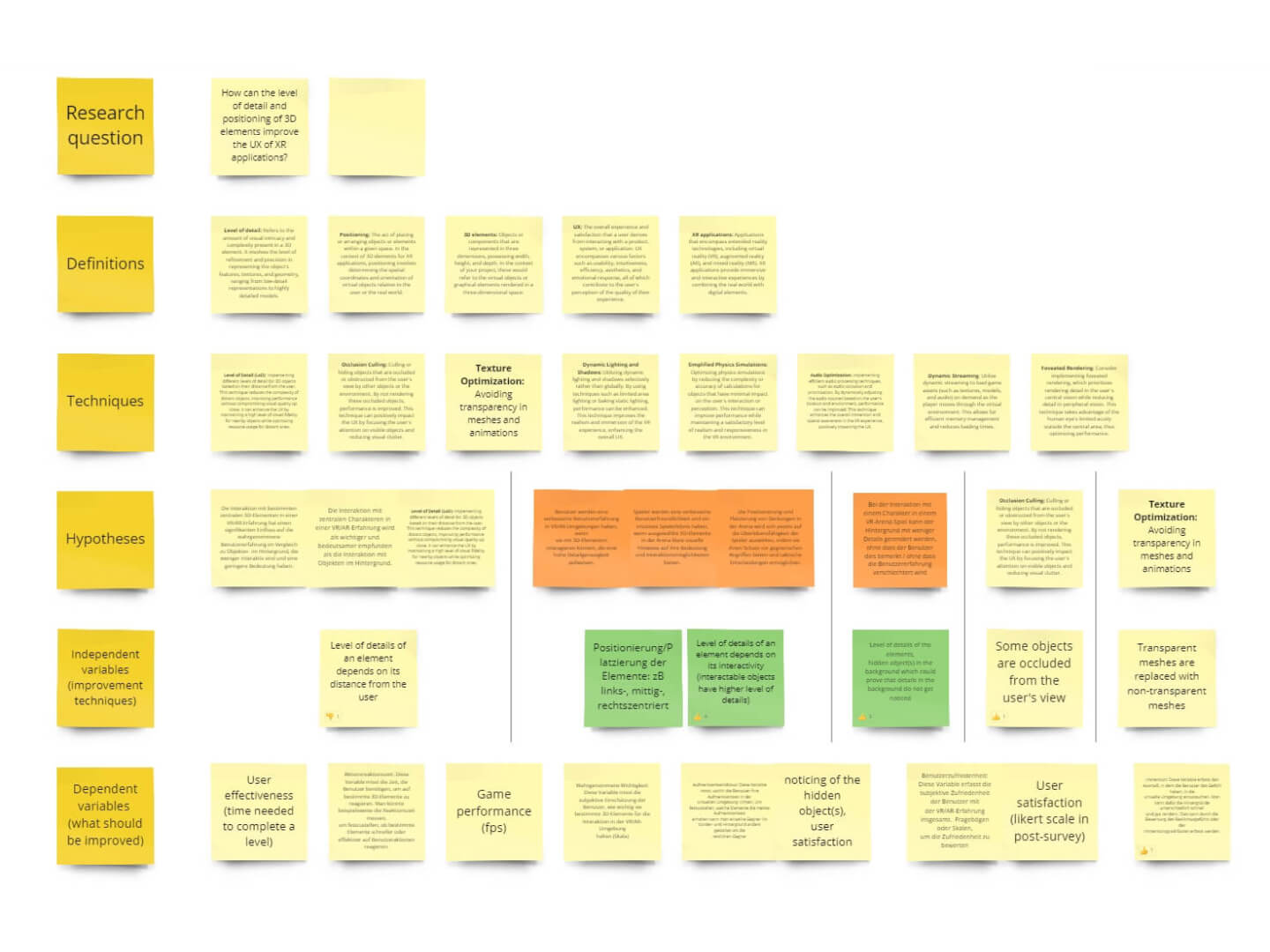

- Research how the level of detail (LOD) and positioning of elements can improve the user experience of an XR application

Tasks

- Research existing state-of-the-art tools

- Design and implement a basic AR/VR application

- Run user tests and analyze the test data

Summary

In the summer semester of 2023, I participated in an interdisciplinary group project at the Quality and Usability Lab of the Technical University of Berlin. Based on the current literature, we hypothesized that interactive game objects are more relevant for the user experience than non-interactive ones. To test our hypothesis, we developed a VR survival game in Unreal Engine and categorized all its assets as interactive or non-interactive. The former always had the same LOD in the game, while the LOD of the latter decreased with each level. Based on the questionnaire responses, the average game satisfaction was 3.7/5, and only 2 out of 53 participants noticed the difference in the LOD of non-interactive objects. The game even became more enjoyable with each round in spite of the declining asset quality. Based on that, we can say that the user experience was not impacted by the decreased LOD of non-interactive objects, which supports our hypothesis.

Background

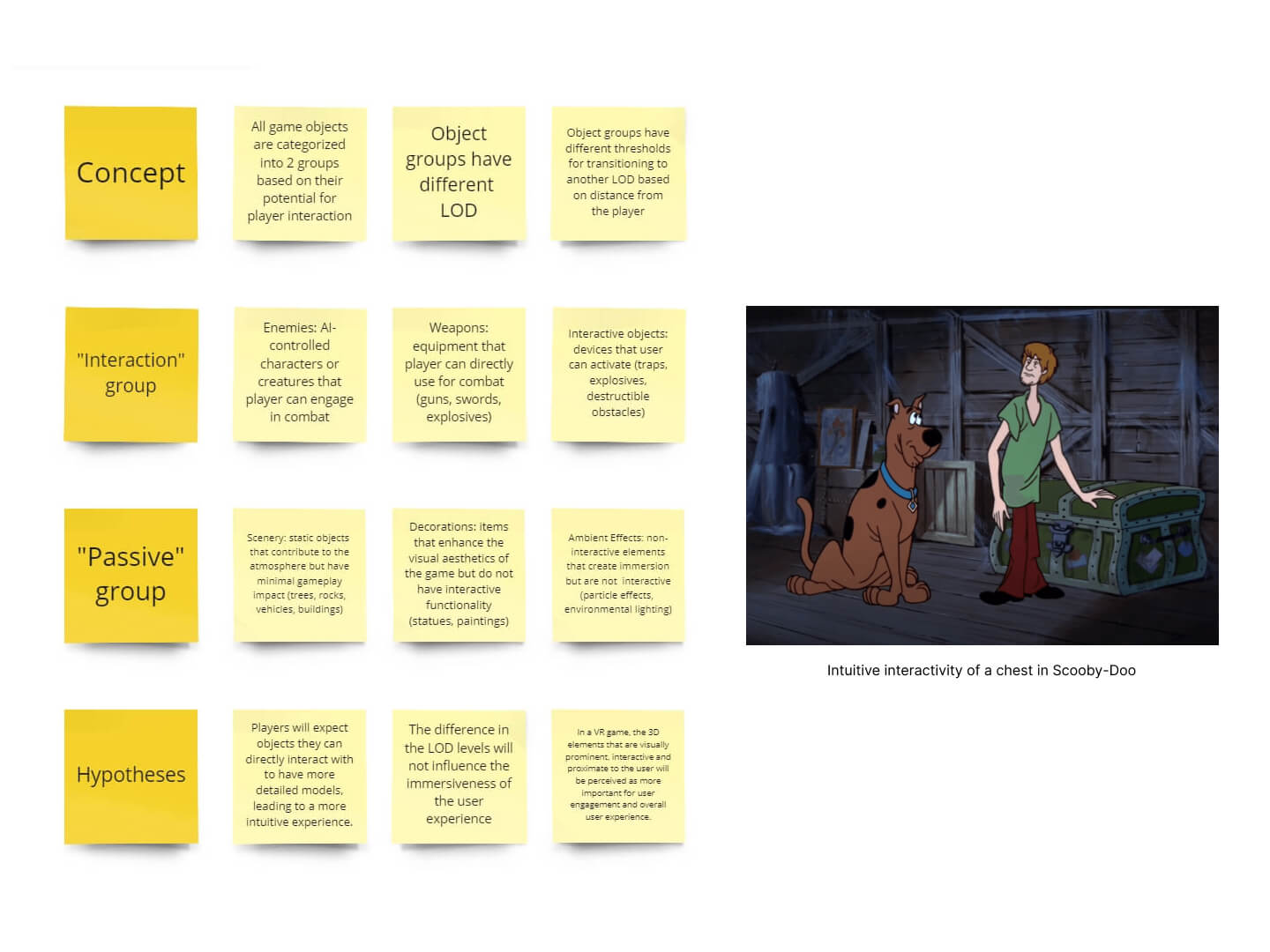

In the summer semester of 2023, together with seven other students, I participated in an interdisciplinary media project at the Quality and Usability Lab of the Technical University of Berlin. Our topic was “Exploring 3D Elements for XR applications – How can the level of detail (LOD) and positioning of elements improve the UX?” The idea was to reduce the LOD of certain 3D objects in the game to boost the performance and visually show the significance of game objects to the player.

Research

First, we established that LOD approaches reduce the number of polygons by selecting an appropriate level of polygon complexity for each model depending on its importance, thus saving resources. If the object is insignificant or far away at a given moment, the system switches to a stored, simplified version of the object. In addition to a reduction of polygons, quality-reduced lighting or low-resolution textures can also change the LOD (Buhr et al., 2013).
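The switching rule described above can be sketched in a few lines. This is only an illustration, not the logic used in our Unreal Engine project: the distance thresholds, the `is_important` flag, and the function name `select_lod` are assumptions made for the example.

```python
from bisect import bisect_right

# Hypothetical distance limits in metres: LOD 0 is used below 5 m,
# LOD 1 below 15 m, LOD 2 below 40 m, and LOD 3 beyond that.
LOD_DISTANCES = [5.0, 15.0, 40.0]


def select_lod(distance, is_important=True, thresholds=LOD_DISTANCES):
    """Return the index of the stored model variant to render (0 = full detail)."""
    # Count how many thresholds the object has passed.
    lod = bisect_right(thresholds, distance)
    # Assumption for this sketch: insignificant objects drop one extra step,
    # but never below the coarsest stored variant.
    if not is_important:
        lod = min(lod + 1, len(thresholds))
    return lod
```

For example, `select_lod(20.0)` picks variant 2, while an insignificant object at 3 m gets variant 1 instead of 0.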

One approach is to render only the central circle, the foveal zone, with a high level of detail, since human vision is limited to 135° vertically and 160° horizontally, and fine details are only perceived within a central circle of 5° (Guenter et al., 2012). Watson et al. (1997) demonstrated that peripheral resolution could be diminished by nearly 50 percent with no significant decline in performance. These limited resources of vision, in addition to the limited resources of attention, can be exploited by modifying the level of detail of objects in the game to achieve lighter applications. However, such gaze-based LOD management faces big challenges when it comes to updating the display after rapid eye saccades without artifacts (Koulieris et al., 2014). Anticipating eye movements is difficult because, in addition to low-level image features such as luminance, contrast, and movement, higher-level cognitive phenomena also attract attention and are much harder to predict. Unfortunately, our team was not provided with eye-tracking hardware and software to research this approach further.
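As a rough illustration of the gaze-based idea, the sketch below checks whether an object falls inside the foveal zone. It was not part of our project, since we had no eye tracker. It assumes that the 5° figure is the diameter of the central circle, that both direction vectors are non-zero, and that a gaze direction is available. All names are hypothetical.

```python
import math

# Assumption: the 5 degree central circle is treated as a diameter,
# so the foveal radius is 2.5 degrees around the gaze direction.
FOVEAL_DIAMETER_DEG = 5.0


def eccentricity_deg(gaze_dir, to_object):
    """Angle in degrees between the gaze direction and the direction to an object."""
    dot = sum(g * o for g, o in zip(gaze_dir, to_object))
    norm = math.hypot(*gaze_dir) * math.hypot(*to_object)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def in_foveal_zone(gaze_dir, to_object, diameter_deg=FOVEAL_DIAMETER_DEG):
    """True if the object lies inside the central high-detail circle."""
    return eccentricity_deg(gaze_dir, to_object) <= diameter_deg / 2
```

An object straight ahead has an eccentricity of 0° and would keep full detail. An object 10° off the gaze direction falls outside the zone and could be rendered with a lower LOD.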

Instead, we focused on the object’s importance, which according to Reddy (1998) can be determined by attention deployment over the scene and perceptually motivated criteria: distance, size, eccentricity, and velocity of objects. The number of criteria that determine object importance makes it difficult to change the LOD at the right moment. It has already been shown that eye movement patterns differ among game genres. Eye-tracking data from an experiment with 3D video games by El-Nasr and Yan (2006) show that players in first-person shooter games pay attention mainly to the center of the screen, where the gun’s crosshair is located, while the player’s visual pattern in action-adventure games covers the whole screen. In both genres, however, bottom-up visual features, including color contrast and movement, can subconsciously trigger the visual attention process.

Test Design

Guided by our research, we decided to further investigate the distribution of attention in an extended reality environment. We hypothesized that an object’s interactivity and positioning draw the user’s visual attention. Non-interactive and distant objects could therefore be rendered with lower quality, which would improve game performance and visually indicate their lower significance. From this point of view, our first hypothesis evolved: “If non-interactive and distant objects have a lower level of detail than interactive and proximate objects, it will result in a better game performance and better user experience.” This hypothesis had two independent variables, “level of detail of non-interactive objects” and “level of detail of distant objects,” as well as two dependent variables, “game performance” and “user experience.”

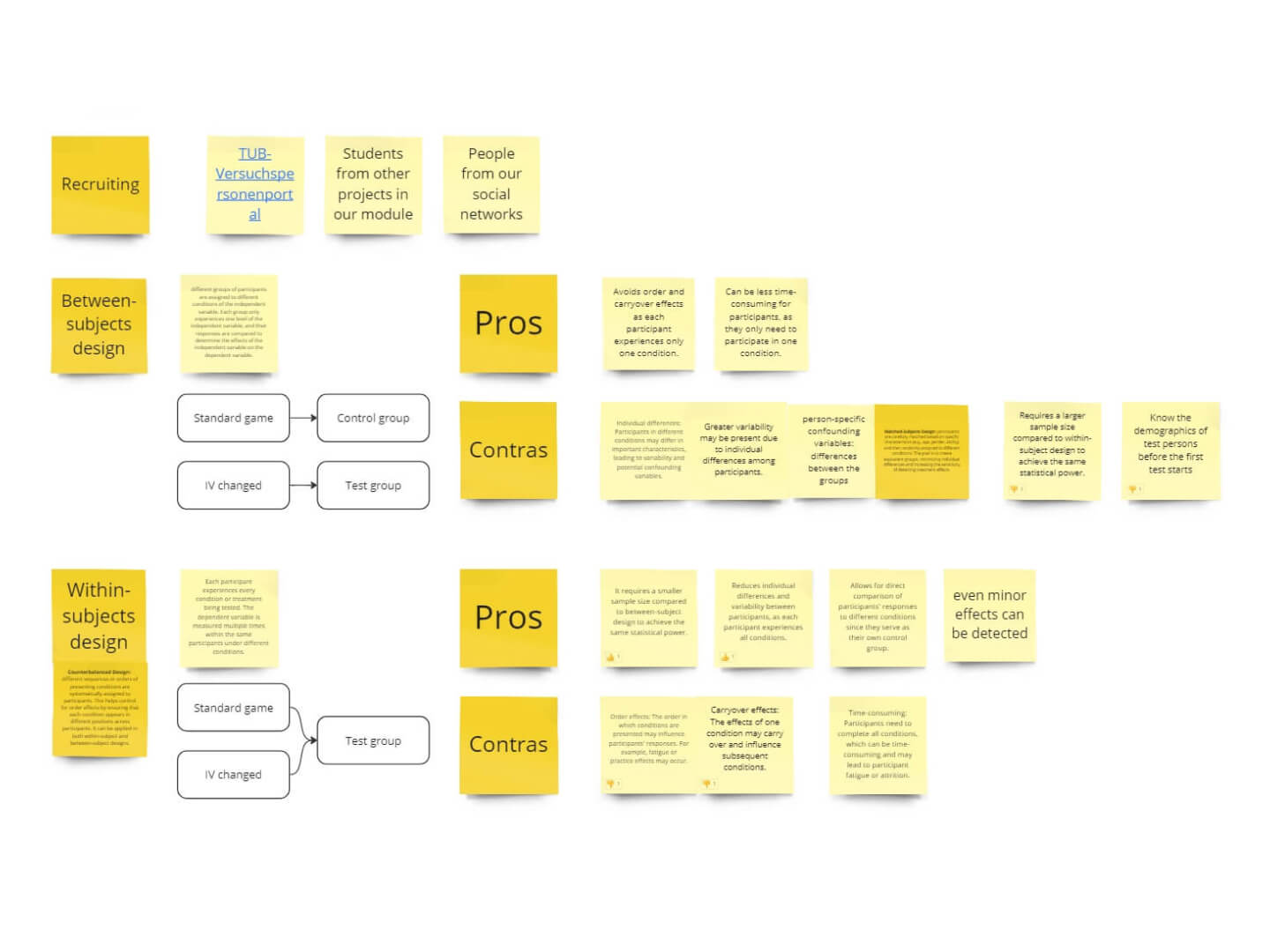

Given the relatively short time available for the project, we decided on a within-subjects test design because we could not predict whether we would find enough participants to draw an empirical conclusion. While we were creating the test plan, we realized that testing both object interactivity and object proximity would require doubling the game time for our participants. This would cause player fatigue, a decrease in overall attention, and inaccurate test results. Additionally, two independent variables could interfere with each other and distort the test data. Therefore, we decided to focus only on object interactivity and abandoned the proximity aspect. Our final hypothesis was therefore “If non-interactive objects have a lower level of detail than interactive objects, it will result in a better game performance and better user experience.” It had only one independent variable: “level of detail of non-interactive objects.”

The user experience had to be evaluated with a carefully designed two-part questionnaire. The pre-game part had questions about demographics, limitations, general game experience, and general VR experience. The post-game part had questions about the user experience, user engagement, and perceived differences in object resolution. We had no technical means of determining the difference in game performance in each test session, so we conducted a separate session to measure the FPS of the same game objects at different LODs...

Questionnaire in Google Forms

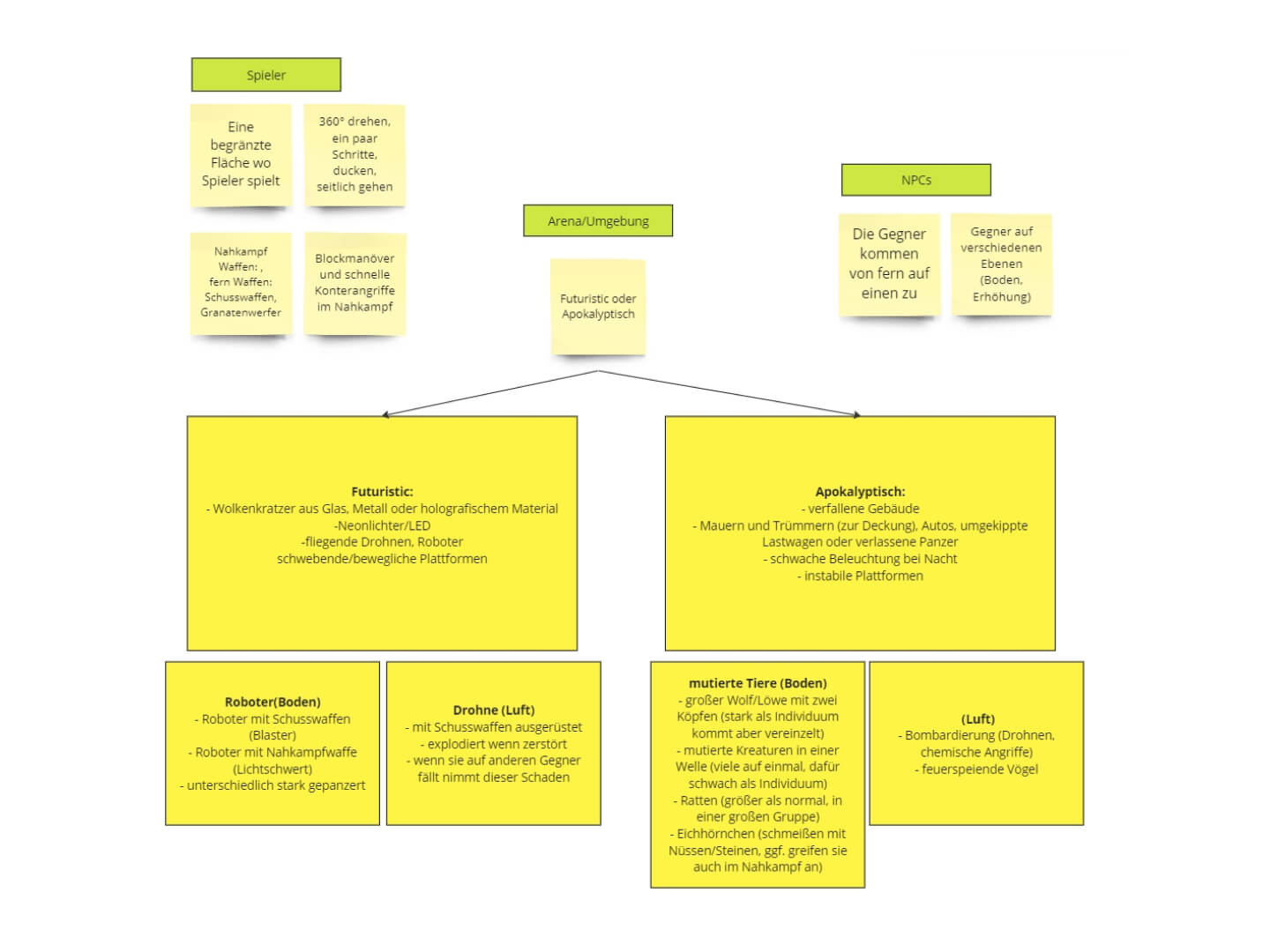

Game Design

Once the hypothesis and variables were set, we had to develop a suitable game for testing our hypothesis. At first, we liked the idea of an escape room because of the variety of interactive and non-interactive objects. However, we didn’t want to make the user guess which objects are interactive and which are not. We also wanted to reduce the effort for our developers and the game complexity for inexperienced participants by minimizing teleportation, turning, and grabbing. That’s why we decided to create a simple arena shooter game with weapons, enemies, and destructible objects.

Before starting with a concept, all designers had a research meeting where each put on a Meta Quest 2 headset and tried out several VR shooter games. Among those were First Steps, Superhot, Vader Immortal III, Population: ONE, Propagation VR, and others. Our goal was to explore the subtleties of VR arena shooters and get inspiration for our future game. We decided to go with a sci-fi theme because Quixel, UE Marketplace, and other asset stores had a lot of free models, textures, and environments in that style that we didn’t have to create ourselves. We divided all imported assets into interactive (weapons, enemies, destructible walls, and barrels) and non-interactive (everything else: decorations). The latter received several variations with decreased LOD and texture quality for testing.

The game was set in a warehouse to reduce the space to be rendered. Melee and ranged combat enemies spawned from different directions in the air and on the ground, moved to the center of the arena, and tried to damage the player. The player stood in the middle of the arena and tried to survive by killing all enemies and destroying objects, using blasters as ranged weapons and a knife as a melee weapon. We included both melee and ranged combat in the game to diversify the viewing distances. The game had four enemy waves. With each subsequent wave of enemies, the level of detail of the non-interactive objects was reduced while the level of detail of the interactive objects stayed the same.
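The wave rule can be summarized with a small sketch. It is not our Unreal Engine implementation: it assumes that non-interactive assets start at full detail in the first wave and lose exactly one LOD step per wave, and the names `Asset` and `lod_for_wave` are made up for the example.

```python
from dataclasses import dataclass

WAVES = 4  # number of enemy waves in the game


@dataclass(frozen=True)
class Asset:
    name: str
    interactive: bool


def lod_for_wave(asset, wave):
    """Return the LOD index (0 = full detail) of an asset in a 1-based wave."""
    if not 1 <= wave <= WAVES:
        raise ValueError(f"wave must be between 1 and {WAVES}")
    # Interactive assets (weapons, enemies, destructible objects) never change.
    if asset.interactive:
        return 0
    # Assumption: decorations lose one LOD step with every new wave.
    return wave - 1
```

With these assumptions, a blaster stays at LOD 0 in all four waves, while a decorative crate goes from LOD 0 in the first wave to LOD 3 in the last one.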

Mid-presentation

In the middle of the semester, we had to report our progress to the supervisor in the form of a presentation. Each team member took responsibility for presenting a part of the project. My part was about the test design.

Mid-semester presentation

Testing

Our supervisor provided us with a test room in the TEL high-rise on the campus of the Technical University of Berlin, equipped with a powerful PC, a laptop, a Meta Quest 2, fans, and a protected area for playing VR games. To create the same test conditions for all participants, we compiled a list of necessary actions during the test and conducted several trial tests. The trial tests also helped us identify crucial game bugs, polish the test procedure, and estimate the time needed for test preparation and for the test itself. Before each test, a team member had to fetch the room key and set up the PC with the VR headset for game sessions, the laptop for filling out the questionnaire, paper consent forms, fans for cooling down the room, and water with glasses for participants. When a participant arrived, a team member had to greet them, let them fill out the consent form and the pre-game part of the questionnaire, disinfect and adjust the VR headset for them, explain how to play the game, let them play four waves of the game, let them fill out the post-game part of the questionnaire, thank them, and say goodbye.

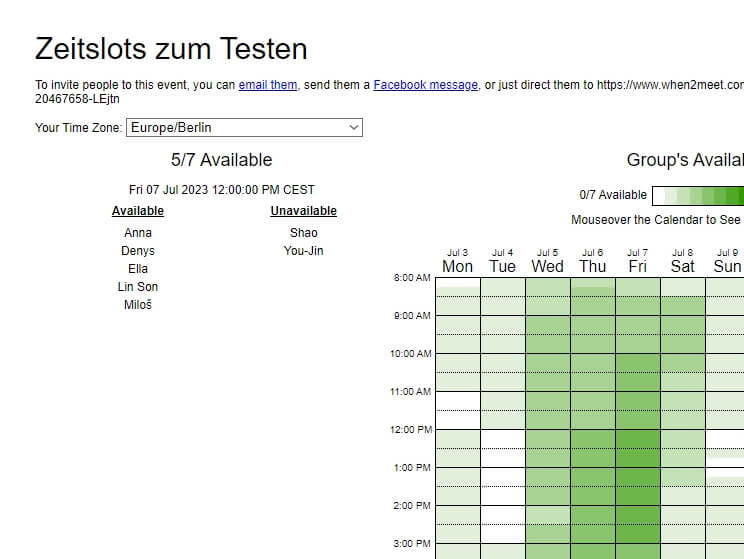

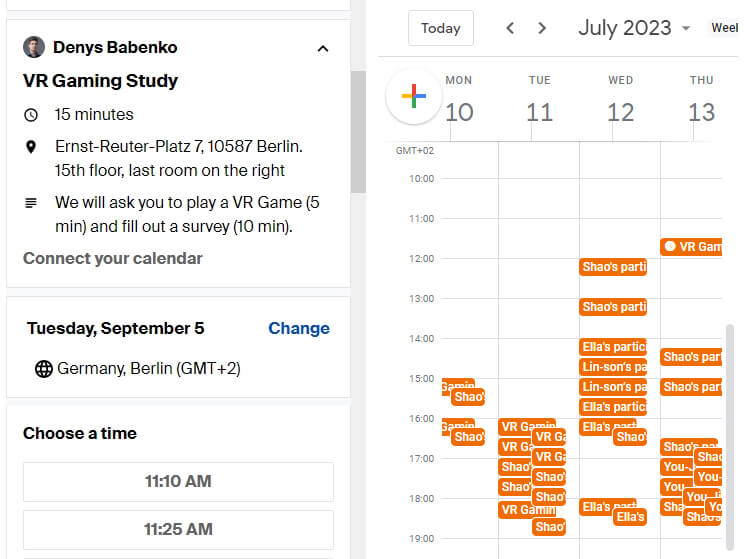

Once we knew the availability of the test room and the approximate test duration, we started creating a system that would allow participants to book time slots that are visible to the team members. First, we tried When2meet. It was a convenient website for showing the time slots, but it was not responsive and therefore not usable on mobile devices. The free version of Calendly didn’t have the event type we needed for our tests. Eventually, a combination of Doodle and Google Calendar worked well. Doodle made it convenient for participants to book time slots, and a shared Google Calendar let the team see the booked time slots live. To recruit participants, the members of our team mostly used their social networks. We also asked the supervisor to send a test invitation email to other students of the “Advanced Projects at the Quality and Usability Lab” module. In exchange for their time, we participated in tests for their projects.

Test results

To collect the questionnaire responses, we used the Google Sheets document that Google Forms created automatically. We then processed the collected data and reformatted it into a more informative form. The results show that player satisfaction was not impacted even though we reduced the polygon count of non-interactive objects by up to 96% and the pixel count of their textures by up to 99.6%. The average game satisfaction was 3.8/5 and even increased with each round, the most engaging objects were interactive objects (98.1%), and only 2 out of 53 participants noticed the differences in the quality of the same game objects. So our hypothesis “In a virtual reality survival game, the 3D elements that are interactive and proximate to the user are visually prominent and will be perceived as more important for user engagement and overall user experience” was supported.
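To put the percentages above into perspective, the arithmetic can be reproduced with two small helpers. The mesh and texture sizes in the usage note are hypothetical examples and not the actual sizes of our assets. Only the 2 out of 53 figure comes from our data.

```python
def share_percent(count, total):
    """Share of `count` in `total`, in percent."""
    return 100 * count / total


def reduction_percent(original, reduced):
    """How much smaller `reduced` is than `original`, in percent."""
    return 100 * (1 - reduced / original)
```

For example, `share_percent(2, 53)` is about 3.8, so fewer than 4% of participants noticed the quality difference. A hypothetical mesh going from 10,000 to 400 triangles corresponds to a 96% reduction, and a hypothetical texture going from 4096×4096 to 256×256 pixels corresponds to a reduction of about 99.6%.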

Questionnaire responses in informative form

Outcome

At the end of the semester, we had to present the project outcome in a presentation and write a report. Each team member prepared a part of the final presentation and wrote a part of the final report. I presented the test design and wrote about the project results. The final grade for every team member was 1.3 (where 1 is the best grade and 6 is the worst).

Final Presentation

Final Report

Reflection

It is important to consider the generalizability of the findings. As our time for this project was limited to just one semester, we only created a simple VR game with the elements necessary for testing the hypothesis. The obtained results may be specific to the genre of VR arena shooter games and to the set of interactive and non-interactive objects we chose for the study. The testing period was limited to two weeks, with the first few days allocated for setting up the testing environment and troubleshooting the game. The participant sample was neither large enough for statistically significant conclusions nor representative of the general XR audience. The tests took place in a controlled and simulated environment, which may not have fully captured the complexities of real-world user experiences in VR games.

The validity and reliability of the results could be improved by using a larger and more diverse group of participants of different ages, occupations, abilities, and levels of VR experience. Conducting user tests with a between-subjects design and a control group might also lead to more specific results. Different VR game genres could be tested on different VR platforms to assess how different games perform across various hardware configurations. In addition to the questionnaire, more objective data could be collected during the test sessions using eye tracking, body tracking, and other comparative metrics such as response time, frame rate, and completion time. This could provide a more comprehensive understanding of the impact of different LODs on system performance.

Despite these limitations, this project can still serve as a starting point for further research and optimization efforts in improving the UX of XR applications. The overall results of the evaluation, as well as the prototype, open the door to exciting possibilities for future research and development. Further investigations based on our findings could delve into the thresholds of visual perception in interactive scenarios. Exploring the limits at which users detect changes in asset quality while immersed in dynamic experiences could provide more precise guidelines for resource allocation. Studies on how intuitively users infer an object’s interactivity from its visual quality could also give insight into improving the user experience. Studies with other VR experiences across diverse genres could show how well our findings generalize and reveal whether the observed trends hold across different types of virtual environments and interactive scenarios.

References

- Buhr, M., Pfeiffer, T., Reiners, D., Cruz-Neira, C., & Jung, B. (2013). Echtzeitaspekte von VR-Systemen. In Dörner, R., Broll, W., Grimm, P., & Jung, B. (Eds.), Virtual und Augmented Reality (VR/AR), 195–238. Springer Vieweg. https://doi.org/10.1007/978-3-642-28903-3

- El-Nasr, M. S., & Yan, S. (2006). Visual attention in 3D video games. Association for Computing Machinery, New York, NY, USA, 22–es. https://doi.org/10.1145/1178823.1178849

- Guenter, B., Finch, M., Drucker, S., Tan, D., & Snyder, J. (2012). Foveated 3D graphics. ACM Trans. Graph. 31, 6, Article 164 (November 2012), 10 pages. https://doi.org/10.1145/2366145.2366183

- ISO Standard 9241, part 210. (2010). Ergonomics of Human-system Interaction – part 210: Human-centred Design for Interactive Systems (Formerly Known as 13407). International Organization for Standardization, Geneva.

- Koulieris, G. A., Drettakis, G., Cunningham, D., & Mania, K. (2014). C-LOD: Context-aware Material Level-of-Detail applied to Mobile Graphics. Computer Graphics Forum, 33: 41–49. https://doi.org/10.1111/cgf.12411

- Reddy, M. (1998). Specification and evaluation of level of detail selection criteria. Virtual Reality 3, 132–143. Springer Verlag. https://doi.org/10.1007/BF01417674

- Watson, B., Walker, N., Hodges, L. F., & Worden, A. (1997). Managing level of detail through peripheral degradation: effects on search performance with a head-mounted display. ACM Trans. Comput.-Hum. Interact. 4 (December), 323–346.